A handful of rock bands are so rooted in our social consciousness that most of us know who they are. I can think of a few, and for some reason they're all British: The Beatles, The Rolling Stones, Pink Floyd, and Led Zeppelin. But there's one more we all know: The Who.

The Who - Pete Townshend, left, Keith Moon and Roger Daltrey, center, and John Entwistle, right

The TV show CSI exposed millions to five of their most popular songs, but before that The Who toured the world extensively, were and continue to be played on the radio, and sold over 40 million records worldwide. There's also a fair amount of drama in their lives: the two remaining members of the band, Pete Townshend and Roger Daltrey, and their most recent drummer, Zak Starkey, son of Beatle Ringo Starr, have appeared regularly in pop culture news.

There are the stories about Roger Daltrey, who claims he's losing his hearing and sight, and suspects he's not long for this world. Townshend goes on about his dislike of performing and touring with The Who. And then there's Zak Starkey, who was fired from the band, then rehired, then supposedly told to inform the public he'd decided to leave the band on his own, when he had actually been fired again!

That's lots of drama for a band that got its start in 1964, although the core members had been playing together since '61. Sixty-plus years of drama for a seminal band, one that contributed significantly to the foundation of rock'n'roll, from the concept of rock operas to instrument destruction, power chords, guitar feedback, intense concert volume, the use of synthesizers, and more.

There were four members: Roger Daltrey on vocals, Pete Townshend on guitar, vocals, and synthesizers, Keith Moon on drums, and John Entwistle on bass and horns. All contributed intensely to the sound of one of the loudest and most innovative British rock bands ever to play. Each member of the band was unique and filled a role no other could.

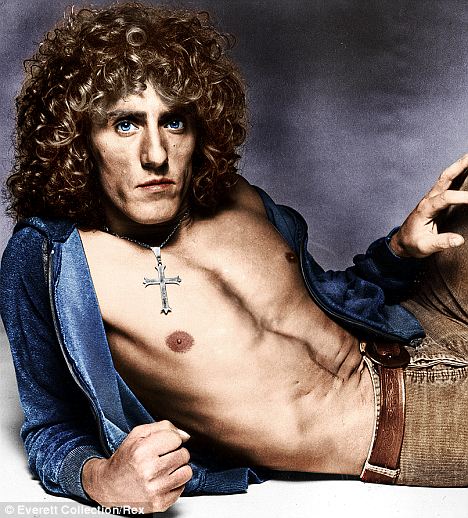

There's Roger Daltrey, whose powerful voice and dynamic presence as lead singer brought to life the concepts Pete Townshend conceived. He became the deaf, dumb and blind man in the band's first hit album, Tommy, and no one could have better brought to life the songs from the anthemic LP Who's Next. Roger inhabited the characters Pete created, and to this day he's the voice of The Who.

Roger Daltrey

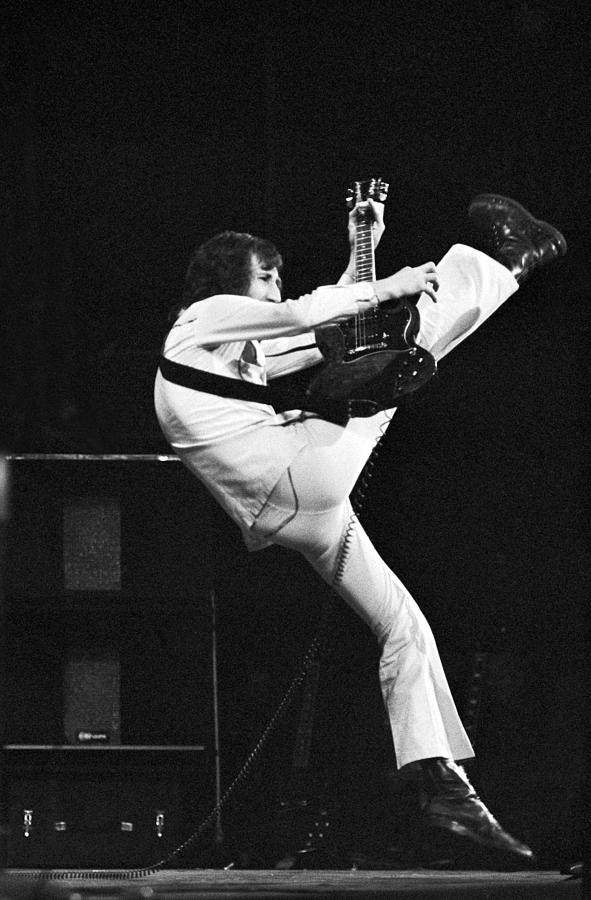

Then there's Pete Townshend, the principal songwriter and composer of the band. He is the idea man, who used his background in art and pop to conceive unique song and album concepts, from mini-operas like the song A Quick One, While He's Away to full-blown operatic albums such as Tommy and Quadrophenia.

Pete Townshend

Pete is the guitarist whose power chords became a bedrock element of hard rock. He is also an innovator of amplifier feedback and distortion, one of a few key figures in British rock'n'roll in the early to mid-1960s who used the guitar to inject a rich component into psychedelic and hard rock.

He has also contributed lead and backing vocals throughout the band's long career, and his distinctive voice has always been a major differentiator between the band and their main rival, Led Zeppelin. He was an early adopter of the synthesizer, notably on the album Who's Next, where its use became a foundational aspect of many of the band's greatest songs, including Baba O'Riley and Won't Get Fooled Again.

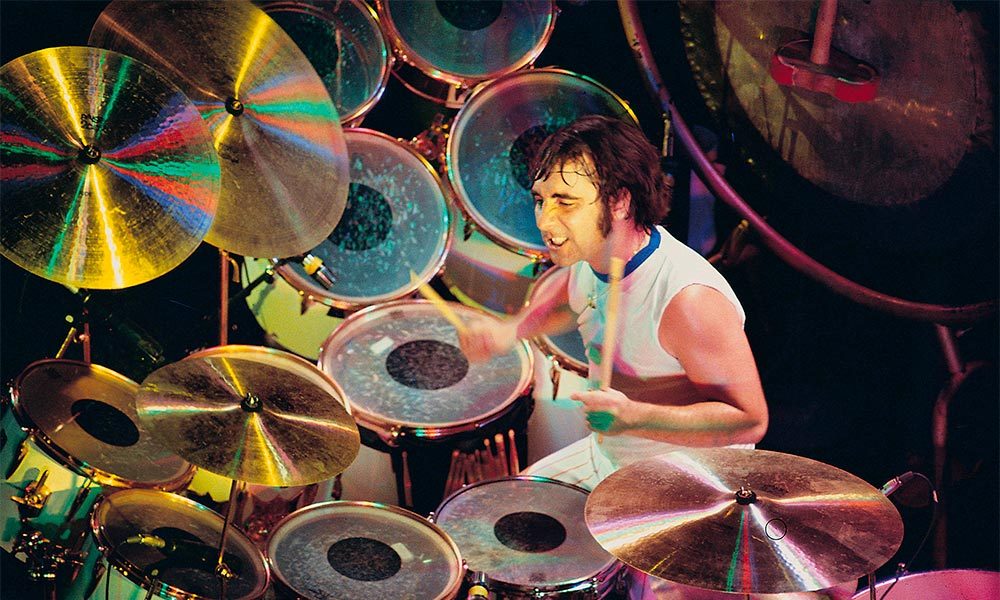

The original drummer, Keith Moon, had an unpredictable and wild style. Instead of providing a steady rhythm the band could follow, he used his tom-toms and cymbals as a lead instrument, constantly thrashing about and throwing in rolls and fills. He was dynamic and chaotic.

Keith Moon

And finally, there's bassist John Entwistle, who also ignored his responsibility as part of the rhythm section and played more like a lead guitarist than a traditional bassist. He played loud, treble-rich, and complex bass solos that anchored the band's unique sound but left Pete Townshend to hold down the rhythm.

John Entwistle

These four men became the inspiration for generations of bands, including U2, Rush, Cheap Trick, The Clash, Sex Pistols, The Jam, Oasis, Aerosmith, and more. Drummers, bassists, guitarists, and singers all over the world grew up on The Who's unique and powerful music, which influenced not only traditional rock'n'roll, but also hard rock, punk rock, heavy metal, and more.

So while The Who may be mostly a news item nowadays, two old men who rant and rave while their heritage has mostly passed them by, they are responsible for much of what we currently listen to.

Without The Who, rock would not be what it is today.

Looking for more information about technology and music? Visit the Gary Lucero Writer website and join my mailing list. I send out a bi-weekly newsletter that discusses technologies such as AI, as well as rock and progressive rock music, and I sometimes delve into philosophical topics.

You can find me on Instagram, Facebook, and YouTube, and I host a podcast on a variety of sites, including Apple Podcasts, Podbean, Amazon Music, iHeartRadio, PlayerFM, and Podchaser.

If you want to reach me using e-mail, send me a message at contact@garylucerowriter.com.